.jpg)

Advanced Research

9 Essential Questions for Evaluating Employee Satisfaction Software

February 20, 2026

Employee Research

Articles

.jpg)

Advanced Research

9 Essential Questions for Evaluating Employee Satisfaction Software

February 20, 2026

Employee Research

Articles

Advanced Research

How to Evaluate Market Research Vendors for Global Reach

Team Remesh

February 10, 2026

Market Research

Articles

Advanced Research

How to Evaluate Market Research Vendors for Global Reach

Team Remesh

February 10, 2026

Market Research

Articles

Advanced Research

3 Early-Stage Research Methods to Gather Consumer Insights

Team Remesh

January 27, 2026

Market Research

Articles

Advanced Research

3 Early-Stage Research Methods to Gather Consumer Insights

Team Remesh

January 27, 2026

Market Research

Articles

.avif)

Advanced Research

Why Agencies Should Embrace AI Tools for Market Research

Team Remesh

January 26, 2026

Articles

.avif)

Advanced Research

Why Agencies Should Embrace AI Tools for Market Research

Team Remesh

January 26, 2026

Articles

Advanced Research

The Top Market Research Companies for the CPG Industry

Team Remesh

January 20, 2026

Market Research

Articles

Advanced Research

The Top Market Research Companies for the CPG Industry

Team Remesh

January 20, 2026

Market Research

Articles

Advanced Research

The Most Cutting-Edge Consumer Insights Software of 2026

Team Remesh

January 5, 2026

Market Research

Articles

Advanced Research

The Most Cutting-Edge Consumer Insights Software of 2026

Team Remesh

January 5, 2026

Market Research

Articles

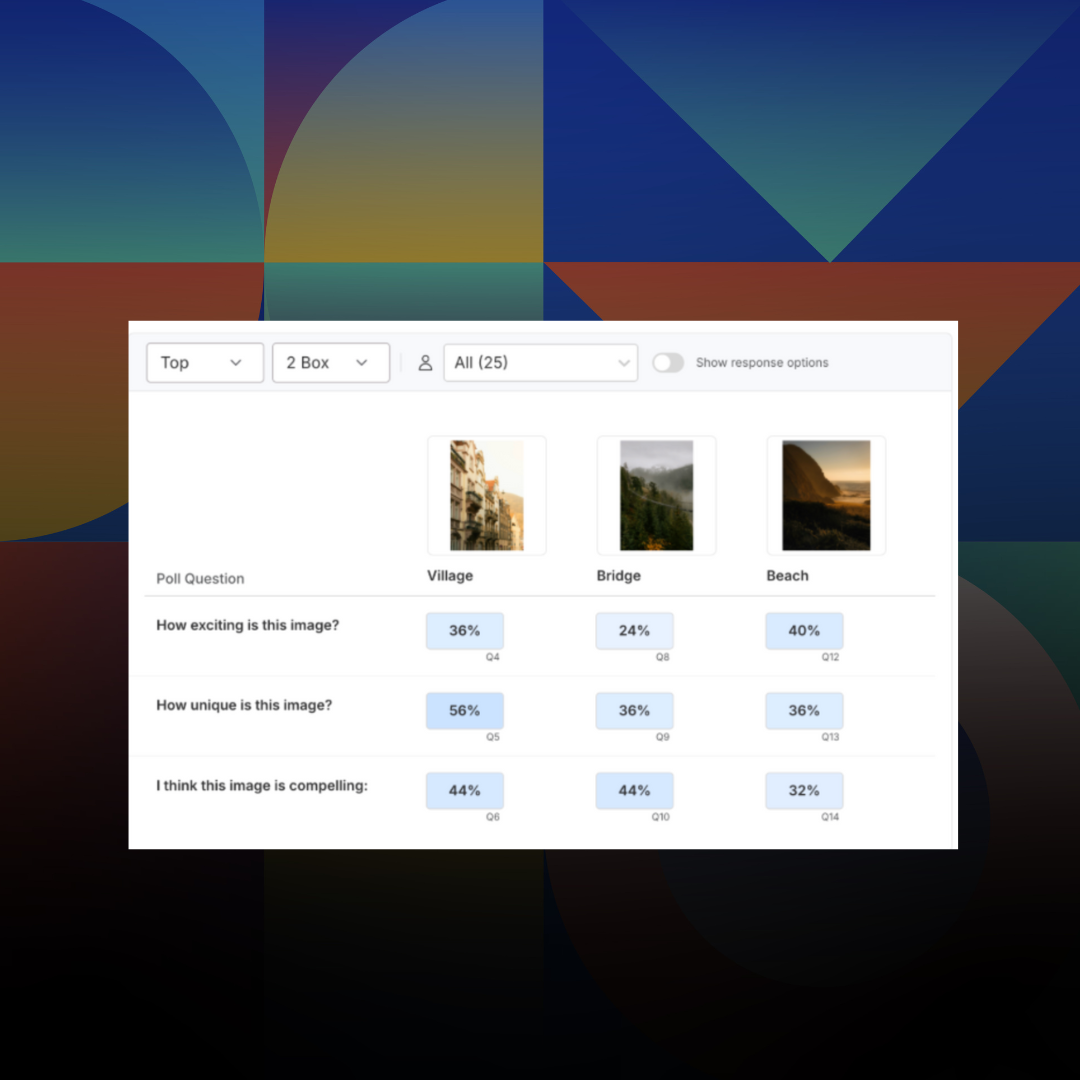

Research 101

Introducing: Poll Comparison - Streamline Concept Testing and Make Better Decisions Faster

Emmet Hennessy

November 24, 2025

Market Research

Articles

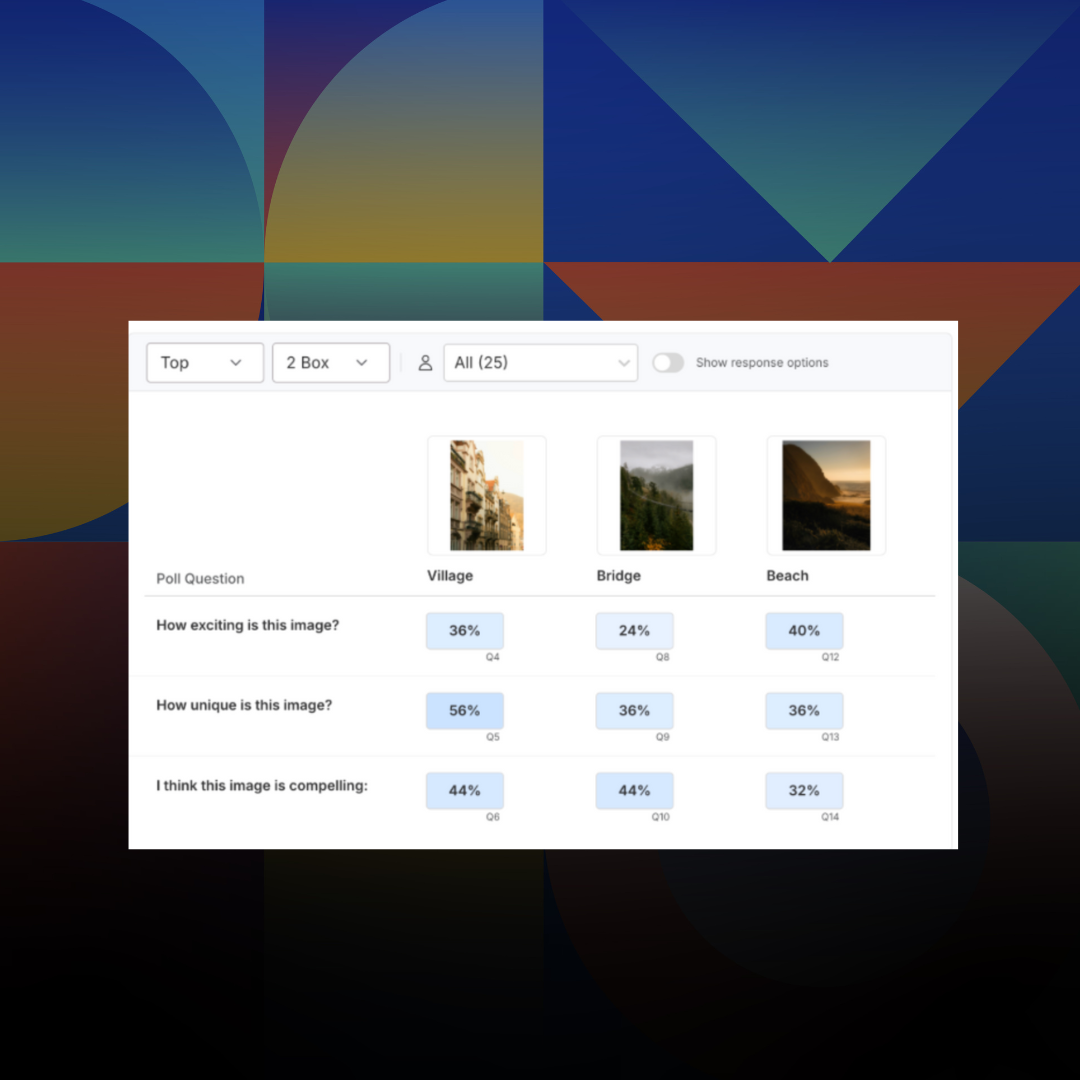

Research 101

Introducing: Poll Comparison - Streamline Concept Testing and Make Better Decisions Faster

Emmet Hennessy

November 24, 2025

Market Research

Articles

AI

Purpose-Built for Research AI is Here - a Letter from the CPO

Jessica Dubin

October 21, 2025

Market Research

Articles

AI

Purpose-Built for Research AI is Here - a Letter from the CPO

Jessica Dubin

October 21, 2025

Market Research

Articles

Advanced Research

Unlock Agile Global Insights: Using Remesh Multi-Language Conversations for Research Across Audiences

Customer Success Team

October 14, 2025

Market Research

Articles

Advanced Research

Unlock Agile Global Insights: Using Remesh Multi-Language Conversations for Research Across Audiences

Customer Success Team

October 14, 2025

Market Research

Articles

Top 11 A.I. Ethics Dilemmas Affecting Tech Right Now

Artificial intelligence presents many ethical dilemmas that affect the tech industry right now. Here are the top 11 ethics issues.

The time to talk about ethical issues in A.I. is now. While machine learning is not a new tech development, the ethical questions and dilemmas surrounding artificial intelligence have never been more pressing. There are multiple ethical questions to confront, including the moral principles and issues listed below.

Using Artificial Intelligence with Immoral Intent

1. Economic Inequality and Labor

Within a population, the first concern that arises when technological advancement nears is job security. As with the original industrial revolution, what terrifies workers is the scalable automation of labor.

During the first industrial revolution, the U.S. government pushed college education for the first time in history. The issue for Americans then was not the cost of college, but the cost of pausing labor to earn a degree. It’s likely that this push for higher education was only successful because the automation of old jobs made “professional” labor (which often required a degree of higher education) an obvious alternative.

Two scenarios exist in this particular A.I. ethics dilemma:

- Preventing Inhumane Practices.

You could argue that it’s barbaric to require human labor in factories and that the next phase of automation through artificial intelligence (the fourth industrial revolution) could enable workers to earn a college degree, or that the government might implement universal income to care for displaced workers.

- Contributions to Inequality.

Alternatively, the government could reject universal income as a socialist policy, and the student loan crisis could deter displaced laborers from obtaining further education, effectively sacrificing human life for technological advancement - or, more specifically, contributing to cyclical poverty and income inequality.

2. Influence on Human Behavior

Whether we know it or not, people are increasingly interacting with machines to complete day-to-day tasks - this poses an issue of transparency, but also of behavioral influence. Google Duplex, for example, uses an A.I. system that sounds like a live person to make restaurant reservations over the phone. The system can respond to questions on the fly and is mostly indistinguishable from a real person.

While human attention and patience are limited, the emotional energy of a machine is not - a machine’s only limitations are technical. Although this could benefit certain fields like customer service, this limitless capacity could create human addiction to robot affection - according to A.I. experts like Stephen Wolfram (whose inventions power part of the iPhone’s Siri), this idea is not entirely unrealistic. That makes Her, a film about a man who falls in love with a machine, more plausible than ever before.

Even without imitating an actual person, many apps are built with the intention of using algorithms to nurture addictive user behavior. Tinder, for example, is designed to keep users on the A.I.-powered app by surfacing less likely matches the longer a user engages in a session.

3. Training Biases in Hiring and Crime

One of the most pressing and widely discussed A.I. ethics issues is the training of bias into systems that perform predictive analysis, such as hiring or crime prediction. Amazon most famously ran into a hiring bias issue after training an A.I.-powered algorithm to surface strong candidates based on historical data. Because previous candidates were chosen through human bias (favoring male candidates), the algorithm favored men as well.

In March 2019, the NYPD disclosed that it had developed Patternizr, machine-learning software that sifts through police data to find patterns and connect similar crimes, and that it has used the software since 2016. The software is not used for rape or homicide cases and excludes factors like gender and race when searching for patterns.

Although this is a step forward from previous algorithms that were trained (and still operate) on racial bias to predict crime and parole violations, actively removing bias from historical data sets is not standard practice. That means this trained bias is at best an insult and inconvenience; at worst, a risk to personal freedom and a catalyst of systemic oppression.

4. Misinformation and Fake News

A lesser-known A.I. ethics issue is the proliferation of deepfakes, a technique that uses A.I. to superimpose images, video, and audio onto existing media, creating a false impression of the original, most often with malicious intent. Deepfakes can include face swaps, voice imitation, facial re-enactment, lip-syncing, and more. Unlike older photo and video editing techniques, deepfake technology will become progressively more accessible to people without great technical skills.

The technology first became widely accessible to the public with the launch of FakeApp, which allows users to easily swap faces with anyone - including celebrities and political figures.

According to experts like Nina Schick, an adviser to former NATO Secretary General Anders Fogh Rasmussen, the manipulation of images and videos through deepfakes could have catastrophic effects on global order - for example, a lip-synced video of Donald Trump announcing nuclear warfare - and as a result, deepfake technology is largely censored by websites like Reddit.

Similar tech was used during the 2016 U.S. presidential election, when Russia implemented Reality Hacking (like the influence of fake news on our Facebook feeds). This information warfare is becoming commonplace and exists not only to alter facts but to powerfully change opinions and attitudes. This practice was also used during the Brexit campaign and is increasingly cited as an example of rising political tensions and confusing global perspectives.

Using Artificial Intelligence as an Invasion of Consumer Privacy

5. Consumer Privacy and Security

Although there are some government regulations put in place to ensure consumer privacy (like the GDPR, a European Union data privacy law), the potential for A.I. to understand buyers on an intimate level is enormous. Some potential examples of how A.I. could breach privacy include:

- Data Exploitation:

Most consumer devices (from cell phones to Bluetooth-enabled light bulbs) use artificial intelligence to collect our personal data in order to provide better, more personalized service. If consensual, and if the data collection is done with transparency, this personalization is an excellent feature. Without consent and transparency, this feature could easily become malignant.

- Identification and Tracking:

Although a phone tracking app is useful after leaving your iPhone in a cab, or losing your keys between the couch cushions, tracking individuals could be unsafe at a small scale (like domestic abuse survivors seeking privacy) or at a large scale (like government-enforced compliance, à la Big Brother).

- Voice and Facial Recognition:

Like the features before it, voice and facial recognition is a convenient feature implemented in everyday consumer devices. On the iPhone, for example, a user can show their face to unlock their phone instead of typing in a passcode. Alternatively, facial recognition could be used to track and control minorities, as China does with Uighurs, a Muslim minority.

6. Global Security and Intelligence

Similar to the risks of international misinformation (like fake news reports), the ethical risks of A.I. in this capacity are colossal. While replacing human soldiers with artificially intelligent robots, or creating autonomous weapons, could reduce the harm a defending government might cause, artificial intelligence poses an ethical dilemma comparable to that of the nuclear bomb.

Although international leaders rarely speak of the role of artificial intelligence in nuclear weapons testing, companies like Microsoft have quietly announced their willingness to sell advanced A.I. systems to the Pentagon. Both Moscow and Beijing have also suggested they will use and test autonomous tanks, which pose enormous challenges to A.I. ethics guidelines.

Using Artificial Intelligence to Reduce Consumer Agency

7. Singularity, or the Robot Overlords

Like a nightmarish episode of The Jetsons, the idea of the robot overlord looms in consumer minds as the most obvious A.I. ethical dilemma. According to Nick Bostrom, a superintelligence expert at the University of Oxford, the malicious robot overlords of our imaginations could become a reality if future A.I. systems hold values that don’t align with human values. Who are we as humans without autonomy? Who are robots when they’re given autonomy? And finally, how do we define each when given the same freedom and intelligence?

According to Ray Kurzweil, futurist and a Director of Engineering at Google, singularity is closer than we think. Kurzweil predicts we will merge human intelligence with artificial intelligence by 2045, which he suggests will make us even more intelligent - something to look forward to, not fear.

8. Humane Treatment of A.I.

Should A.I. systems (or robots) have rights? If Kurzweil is correct, the humane treatment of A.I. may simply mean the humane treatment of humans. At least one artificially intelligent system already has citizenship: Sophia (a humanoid made by Hanson Robotics) was granted Saudi Arabian citizenship in 2017.

Artificial Intelligence and Unpredictable Secondary Events

9. Mistakes Made By A.I.

If there is artificial intelligence, there is also artificial stupidity. Currently, A.I. systems are trained on patterns in certain examples, and because all possible examples cannot be covered, the systems are easily confused when presented with a new scenario. This is because A.I. systems don’t yet have the ability to recognize likenesses as humans do: when confronted with scenarios that differ from the examples used in training, the systems cannot contextualize the situation.

If A.I. systems are easily fooled, that means they’re vulnerable to influence from nefarious parties, as in cases of adversarial attacks in machine learning. An algorithm, for example, might read fake news from an influential account and popularize it based on optimized content factors rather than fact-checking the news source or idea like a journalist might before publishing it.

10. Regulation of A.I.

At large, the tech industry is most controversial for lacking regulation - in part because the industry changes so rapidly that it’s difficult to know where lawmaking should begin, and in part because the culture of tech runs on the same dreams as the Wild West. That is, on innovation at any cost.

There have been attempts at self-regulation - like at Google, which put together an ethics board only to dismantle it shortly after because of its controversial members, including those who deny climate change and hold anti-immigrant sentiments. Similarly, Microsoft has vocally encouraged Washington to regulate A.I. systems, especially facial recognition software; however, competitors have criticized the company’s relationship with lawmakers as too close.

Meanwhile, A.I. systems continue to be developed and deployed almost completely devoid of regulation.

11. If Moral A.I. is Not Possible

Despite our best human efforts, it is possible that A.I. will never be moral. The issues with training A.I. systems to act ethically include:

- assuming A.I. can be motivated to act ethically

- expecting universal ethics to emerge

- believing the humans training A.I. to be ethical are themselves ethical

Stephen Hawking, Bill Gates, and Elon Musk have all stated that superintelligence (which is more superficial than super in its current form) should be handled delicately.

Summing Up A.I. Ethics Dilemmas

Can A.I. even exist in an ethical state? Opinions differ, but the facts are clear. The time for A.I. ethical regulations is near, and remaining inactive will be our greatest folly...

Can’t get enough of artificial intelligence? Check out our eBook on the state of A.I. in market research.
