.jpg)

Advanced Research

9 Essential Questions for Evaluating Employee Satisfaction Software

February 20, 2026

Employee Research

Articles

.jpg)

Advanced Research

9 Essential Questions for Evaluating Employee Satisfaction Software

February 20, 2026

Employee Research

Articles

Advanced Research

How to Evaluate Market Research Vendors for Global Reach

Team Remesh

February 10, 2026

Market Research

Articles

Advanced Research

How to Evaluate Market Research Vendors for Global Reach

Team Remesh

February 10, 2026

Market Research

Articles

Advanced Research

3 Early-Stage Research Methods to Gather Consumer Insights

Team Remesh

January 27, 2026

Market Research

Articles

Advanced Research

3 Early-Stage Research Methods to Gather Consumer Insights

Team Remesh

January 27, 2026

Market Research

Articles

.avif)

Advanced Research

Why Agencies Should Embrace AI Tools for Market Research

Team Remesh

January 26, 2026

Articles

.avif)

Advanced Research

Why Agencies Should Embrace AI Tools for Market Research

Team Remesh

January 26, 2026

Articles

Advanced Research

The Top Market Research Companies for the CPG Industry

Team Remesh

January 20, 2026

Market Research

Articles

Advanced Research

The Top Market Research Companies for the CPG Industry

Team Remesh

January 20, 2026

Market Research

Articles

Advanced Research

The Most Cutting-Edge Consumer Insights Software of 2026

Team Remesh

January 5, 2026

Market Research

Articles

Advanced Research

The Most Cutting-Edge Consumer Insights Software of 2026

Team Remesh

January 5, 2026

Market Research

Articles

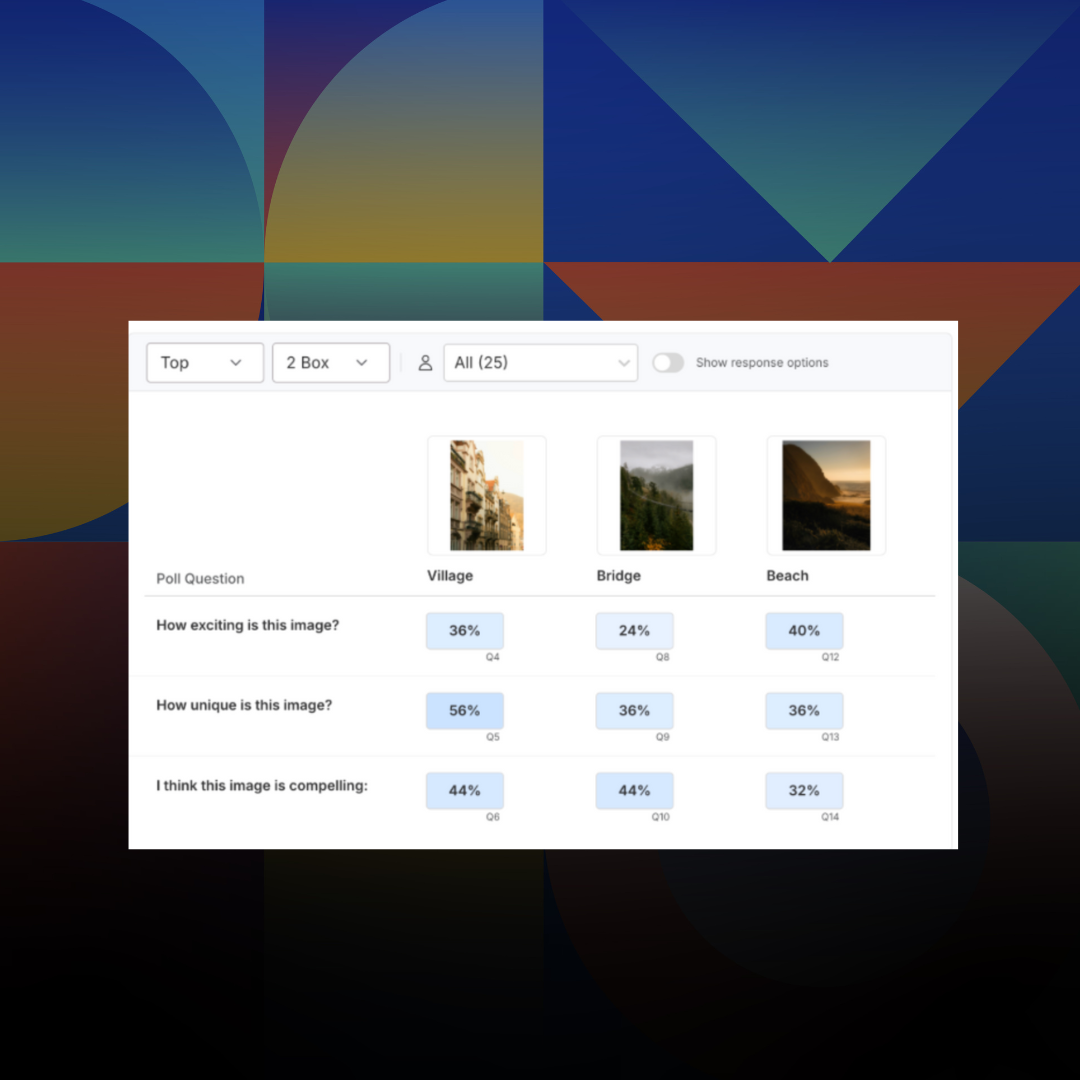

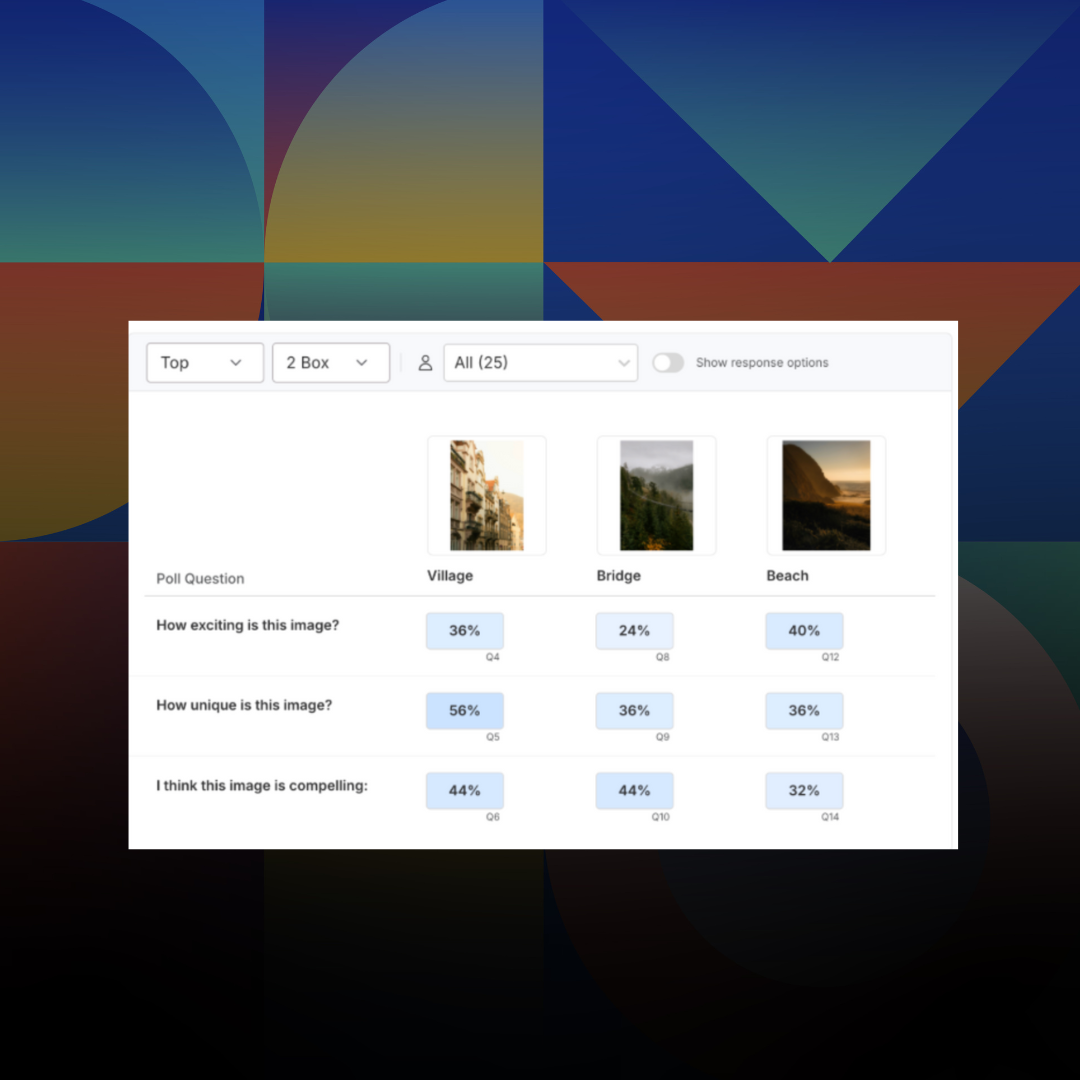

Research 101

Introducing: Poll Comparison - Streamline Concept Testing and Make Better Decisions Faster

Emmet Hennessy

November 24, 2025

Market Research

Articles

Research 101

Introducing: Poll Comparison - Streamline Concept Testing and Make Better Decisions Faster

Emmet Hennessy

November 24, 2025

Market Research

Articles

AI

Purpose-Built for Research AI is Here - a Letter from the CPO

Jessica Dubin

October 21, 2025

Market Research

Articles

AI

Purpose-Built for Research AI is Here - a Letter from the CPO

Jessica Dubin

October 21, 2025

Market Research

Articles

Advanced Research

Unlock Agile Global Insights: Using Remesh Multi-Language Conversations for Research Across Audiences

Customer Success Team

October 14, 2025

Market Research

Articles

Advanced Research

Unlock Agile Global Insights: Using Remesh Multi-Language Conversations for Research Across Audiences

Customer Success Team

October 14, 2025

Market Research

Articles

The Prompt Is the New Moderator Guide

The rise of generative AI has challenged nearly every assumption we hold about how qualitative research is done. This is especially true in analysis. We're now in an era where a well-constructed prompt can replace hours of thematic coding.

For qualitative researchers, it’s especially helpful to think of prompting as a form of moderation. And just like a moderator's questions, AI prompts can be insightful, leading, biased, vague, or masterfully structured.

What Is a Prompt?

A prompt is an instruction or question given to a large language model (LLM) like GPT, Claude, or Gemini that directs its analysis or output. In qualitative research, prompts translate the logic of inquiry into a format the language model can interpret. Strong prompts set context, define the model’s role, specify the scope of analysis, and include instructions to surface assumptions or bias. They serve as the researcher’s voice in guiding AI reasoning.

From Interviews to Instructions: How Prompting and Moderation Intersect

Classic qualitative methods rely on the art of conversation: eliciting, probing, and following threads. The LLM prompt, by contrast, is sometimes imagined as a blunt instrument: a command to "summarize," "cluster," or "extract themes." But that view misses the relationship between human inquiry and machine processing. The most effective research organizations are discovering that successful AI integration requires the same strategic thinking that has always driven high-quality qualitative research, including careful attention to framing, sequencing, and the psychological dynamics of inquiry.

A good prompt is akin to a moderator's guide in compressed form. It frames context, sets boundaries, specifies scope, and signals tone. This parallel is not coincidental. Both moderating and prompting are fundamentally about guiding a conversation toward meaningful insight.

Consider this comparison:

The LLM is not replacing qualitative inquiry. It is amplifying it, but only if we write prompts that reflect the logic of qualitative analysis and the goals of interpretation.

The Anatomy of a Strong Prompt

Strong AI prompts follow five key principles borrowed from decades of human-centered research practice:

1. Context-Aware

Always orient the model with key background information. "You are analyzing responses from a consumer sentiment study with 540 participants" is far more useful than "analyze this data."

2. Role-Based

Frame the AI as a specific analyst or perspective. "Act as a qualitative researcher evaluating consumer responses" creates better boundaries than generic instructions.

3. Scope-Limited

Avoid open-ended "analyze everything" instructions. Be clear about what data to attend to and what outcomes you want.

4. Bias-Aware

Include checks like "List any assumptions or inferences you made in forming this summary." This mirrors the reflexivity we expect from human researchers.

5. Multi-Pass

Use chained prompts to refine, challenge, or contrast earlier interpretations. Just as we probe deeper in interviews, we should probe deeper with AI.
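To make the five principles concrete, here is a minimal sketch of a prompt builder in Python. It assumes prompts are plain text that you paste into or send to whichever LLM you use; the actual model call is omitted because it depends on your vendor's SDK. The function names `build_analysis_prompt` and `build_challenge_prompt`, and the pricing/packaging scope in the usage example, are hypothetical illustrations, not part of any Remesh or LLM vendor API.

```python
def build_analysis_prompt(role: str, context: str, scope: str, output_spec: str) -> str:
    """Assemble a single-pass prompt that covers principles 1-4 (hypothetical helper)."""
    sections = [
        f"Act as {role}.",  # 2. Role-based: frame the model as a specific analyst
        context,  # 1. Context-aware: orient the model with background information
        f"Focus: {scope}",  # 3. Scope-limited: say what data to attend to
        f"Output: {output_spec}",  # 3. Scope-limited: say what outcome you want
        # 4. Bias-aware: ask the model to surface its own assumptions
        "List any assumptions or inferences you made in forming this summary.",
    ]
    return "\n\n".join(sections)


def build_challenge_prompt(previous_output: str) -> str:
    """5. Multi-pass: a follow-up prompt that probes an earlier interpretation."""
    return (
        "Here is an earlier analysis of the same data:\n\n"
        f"{previous_output}\n\n"
        "Which of these themes are weakly supported or overstated? "
        "What alternative interpretations or missing perspectives should be considered?"
    )


# Illustrative usage: the scope and output spec are made-up examples
prompt = build_analysis_prompt(
    role="a qualitative researcher evaluating consumer responses",
    context="You are analyzing responses from a consumer sentiment study with 540 participants.",
    scope="Responses that mention pricing; ignore comments about packaging.",
    output_spec="The top 3-5 themes, each with one representative quote.",
)
```

The second function is where the "probe deeper" habit lives: you feed the model's first answer back in and ask it to challenge itself, much as a moderator would follow up on a vague answer.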

Real-World Prompting in Action

We interviewed expert qualitative researchers about the strategies they use. These practitioners have moved beyond simple "analyze this data" requests to develop nuanced approaches that leverage AI capabilities while maintaining the rigor that organizations require for strategic decision-making.

Researcher A. described their approach: "I compile my curated platform summaries, refined through multiple rounds of the Summarize feature, along with my analytical notes, selected quotes, and relevant data points. I then present this comprehensive package to the LLM with clear parameters: 'Here's the same research study with identical questions asked across two different markets. Identify the top five biggest similarities and differences between them.'"

This prompt works because it:

- Provides rich context (curated summaries + notes)

- Sets clear scope (two markets, same questions)

- Specifies output format (top 5 similarities + differences)

- Assumes comparative analysis capability
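Researcher A.'s packaging step can be sketched as a function that lays out each market's curated material under clear labels before the comparison instruction. This is an illustration under assumptions: the `MarketPackage` fields and function names are hypothetical, and "relevant data points" are folded into the notes for brevity. The closing instruction paraphrases the researcher's own prompt.

```python
from dataclasses import dataclass, field


@dataclass
class MarketPackage:
    """Curated inputs for one market (field names are hypothetical)."""
    market: str
    summaries: list[str]
    notes: list[str] = field(default_factory=list)
    quotes: list[str] = field(default_factory=list)


def _section(title: str, items: list[str]) -> str:
    # Render a labelled bullet section; return "" so empty sections are dropped
    if not items:
        return ""
    return title + ":\n" + "\n".join(f"- {item}" for item in items) + "\n"


def build_comparison_prompt(a: MarketPackage, b: MarketPackage, top_n: int = 5) -> str:
    parts = [
        "Here's the same research study with identical questions asked "
        f"across two different markets: {a.market} and {b.market}.\n"
    ]
    for pkg in (a, b):
        # Same structure for both markets so the model compares like with like
        parts.append(f"=== {pkg.market} ===\n")
        parts.append(_section("Summaries", pkg.summaries))
        parts.append(_section("Analyst notes", pkg.notes))
        parts.append(_section("Selected quotes", pkg.quotes))
    parts.append(
        f"Identify the top {top_n} biggest similarities and the top {top_n} "
        "biggest differences between them."
    )
    return "\n".join(p for p in parts if p)
```

Keeping the two markets in identical, labelled blocks mirrors the "same questions, two markets" framing and makes it harder for the model to blur which quote came from where.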

Researcher S. shared their systematic method: "I've developed a set of standardized prompts with specific refinements to ensure the AI synthesizes my input appropriately. I consistently specify present tense in my instructions, and I explicitly direct the model to avoid hyperbolic language. Without these guardrails, the AI tends to overstate participant sentiment, describing reactions as 'the most amazing thing', which ultimately compromises credibility in reporting."

Their prompts include guardrails like:

- "Use present tense"

- "Avoid hyperbole"

- "Use high-quality business language," added when the output needs to be report-worthy
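One way to keep guardrails like these consistent across studies is to store them once and append them to every standardized prompt. The sketch below does that, and adds a crude post-check that flags hyperbolic phrases in the model's output. The post-check is our own addition rather than part of Researcher S.'s method, and the pattern list is a hypothetical starting point that will produce false positives (for example, a participant who literally says "never").

```python
import re

# Reusable guardrail instructions, modelled on Researcher S.'s list
GUARDRAILS = {
    "tense": "Use present tense.",
    "hyperbole": "Avoid hyperbole.",
    "report": "Use high-quality business language.",
}

# Hypothetical phrases that often signal overstated sentiment; tune per study
HYPERBOLE_PATTERNS = [r"\bmost amazing\b", r"\bincredible\b", r"\beveryone\b", r"\bnever\b"]


def with_guardrails(prompt: str, keys: list[str]) -> str:
    """Append the selected guardrails to a standardized prompt."""
    rules = "\n".join(f"- {GUARDRAILS[k]}" for k in keys)
    return f"{prompt}\n\nStyle rules:\n{rules}"


def flag_hyperbole(output: str) -> list[str]:
    """Return phrases in a model output that match the hyperbole patterns."""
    hits = []
    for pattern in HYPERBOLE_PATTERNS:
        hits.extend(m.group(0) for m in re.finditer(pattern, output, flags=re.IGNORECASE))
    return hits
```

A flagged phrase is a prompt for human review, not an automatic rejection; the researcher still decides whether the language overstates what participants said.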

Why Prompting Deserves to Be a Core Research Competency

Prompting is a methodological skill, not a technical one. It sits alongside interviewing, analysis, and synthesis as a core part of the researcher's toolkit. Organizations that recognize this shift early and invest in developing their teams' prompting capabilities will have significant advantages in generating actionable insights at scale.

This skill doesn't require a degree in computer science, but it does require deep research judgment: the same skills qualitative researchers have always relied on, namely understanding people, data, and the structures of meaning.

As Researcher B. noted: "I always provide the AI with directional context rather than starting from nothing. I'll present a specific research question and request, 'Given this question, what would be a logical progression of related inquiries?' or 'What additional questions would complement this line of investigation?' I avoid generic requests like asking the AI to simply 'write me guide questions or provide insights' without any qualifying framework."

A Practical Prompting Framework

Here's a tested framework you can use immediately:

You are a [ROLE] analyzing a dataset of [TYPE OF RESPONSES] from [AUDIENCE CONTEXT].

1. Identify the top 3–5 themes expressed across responses.

2. For each theme, summarize how it was expressed and quote one representative verbatim.

3. Note any emotional tones or cultural references present.

4. What assumptions did you make in your analysis? What information might you be missing?
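If you reuse the framework often, it can help to keep it as a fill-in template so the bracketed slots are never forgotten. Here is a minimal sketch using Python's standard `string.Template`; the slot names mirror [ROLE], [TYPE OF RESPONSES], and [AUDIENCE CONTEXT], and the values in the usage example are hypothetical.

```python
from string import Template

# The four-step framework, with the bracketed slots as template fields
FRAMEWORK = Template(
    "You are a $role analyzing a dataset of $response_type from $audience_context.\n"
    "1. Identify the top 3-5 themes expressed across responses.\n"
    "2. For each theme, summarize how it was expressed and quote one representative verbatim.\n"
    "3. Note any emotional tones or cultural references present.\n"
    "4. What assumptions did you make in your analysis? What information might you be missing?"
)


def fill_framework(role: str, response_type: str, audience_context: str) -> str:
    # substitute() raises KeyError if any slot is left unfilled, so a prompt
    # never goes out with a blank role or audience
    return FRAMEWORK.substitute(
        role=role, response_type=response_type, audience_context=audience_context
    )


# Illustrative values only
prompt = fill_framework(
    role="qualitative researcher",
    response_type="open-ended survey responses",
    audience_context="first-time home buyers in the US",
)
print(prompt)
```

Using `substitute()` rather than `safe_substitute()` is deliberate: failing loudly on a missing slot is the template equivalent of refusing to start an interview without a screener.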

This isn't just good prompting hygiene; it's good research practice.

The Remesh Advantage: Prompting Meets Participatory Validation

While any researcher can grow in their ability to write prompts, the real power comes from combining smart prompts with rich, validated data. This unlocks rapid discovery of actionable insights without sacrificing the data quality and trustworthiness that stakeholders depend on.

Structured for AI Success: Remesh data comes pre-formatted with the context AI models need, including participant demographic segments, sentiment labels, agreement percentages, themes, and summaries. This means your prompts can be more specific and your outputs more reliable.

Human Validation Built-In: Remesh open-end responses include participant-supplied validation through voting. When you prompt AI to analyze themes, you're working with signals that real people have already endorsed, not just algorithmic clustering.

Upcoming Remesh Agent: Remesh Agent is coming soon to empower users to get direct answers to their data questions, and so much more. Imagine being able to ask "Compare how Gen-Z and Millennials talked about trust in healthcare" directly within our research platform, without having to wrangle CSV exports into an LLM.
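Until that kind of in-platform question answering is available, the manual version of the workflow looks roughly like the sketch below: take an export, keep the responses participants endorsed through voting, group them by segment, and build a comparison prompt. Everything here is an assumption for illustration: the column names (`segment`, `response`, `agreement`), the 50% threshold, and the sample rows are made up and do not describe Remesh's actual export format.

```python
import csv
import io
from collections import defaultdict

# Made-up rows for illustration; "agreement" is the share of participants
# who voted to agree (0-100). Real export columns may differ.
SAMPLE_EXPORT = """segment,response,agreement
Gen-Z,I check reviews before trusting a new doctor,72
Gen-Z,Telehealth feels less personal,31
Millennials,I trust providers my insurer recommends,58
Millennials,Price transparency builds trust,81
"""


def top_responses_by_segment(csv_text: str, min_agreement: float, per_segment: int = 3):
    """Keep vote-endorsed responses, grouped by segment, highest agreement first."""
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        agreement = float(row["agreement"])
        if agreement >= min_agreement:
            groups[row["segment"]].append((agreement, row["response"]))
    return {seg: sorted(rows, reverse=True)[:per_segment] for seg, rows in groups.items()}


def build_segment_prompt(question: str, grouped: dict) -> str:
    lines = [question, ""]
    for seg, rows in grouped.items():
        lines.append(f"{seg}:")
        lines.extend(f'- "{text}" ({agreement:.0f}% agree)' for agreement, text in rows)
        lines.append("")
    # Keep the model anchored to the endorsed responses only
    lines.append("Base your comparison only on the responses listed above.")
    return "\n".join(lines)


grouped = top_responses_by_segment(SAMPLE_EXPORT, min_agreement=50)
print(build_segment_prompt(
    "Compare how Gen-Z and Millennials talked about trust in healthcare.", grouped))
```

Filtering on agreement before prompting means the model reasons over signals real participants already endorsed, rather than whatever happens to be most frequent or most vivid in the raw text.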

The Skills Transfer Is Real

Many researchers worry that overuse of AI might erode their expertise. The opposite is true: the core strengths that make great moderators also make great prompt engineers.

- Knowing when to probe deeper → Chaining follow-up prompts

- Maintaining neutrality → Writing bias-aware instructions

- Managing conversation flow → Structuring multi-step analysis

- Contextualizing responses → Providing rich background information

Beyond the Prompt: What's Next

As AI capabilities evolve, the researchers who thrive will be those who master the art of human and AI collaboration. This means:

- Developing prompt libraries for common research tasks

- Creating validation workflows that combine AI speed with human judgment

- Building feedback loops that improve both prompts and outputs over time

- Training teams on prompt engineering as a core competency
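The first three practices can start as something very small. Below is a minimal, hypothetical sketch of a prompt library: named research tasks, versioned prompt text, and human ratings of the outputs each version produced, so the best-reviewed version rises to the top over time. The class and method names are illustrative, not a Remesh feature.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class PromptVersion:
    text: str
    ratings: list[int] = field(default_factory=list)  # reviewer scores on a positive scale, e.g. 1-5

    def score(self) -> float:
        # Unrated versions score 0 so they never outrank a reviewed one
        return mean(self.ratings) if self.ratings else 0.0


class PromptLibrary:
    """Hypothetical prompt library: named tasks, versioned prompts, human ratings."""

    def __init__(self) -> None:
        self._tasks: dict[str, list[PromptVersion]] = {}

    def add(self, task: str, text: str) -> int:
        versions = self._tasks.setdefault(task, [])
        versions.append(PromptVersion(text))
        return len(versions) - 1  # index of the new version

    def rate(self, task: str, version: int, score: int) -> None:
        # Human judgment closes the feedback loop: a reviewer scores the output
        self._tasks[task][version].ratings.append(score)

    def best(self, task: str) -> str:
        return max(self._tasks[task], key=lambda v: v.score()).text
```

The ratings are where human judgment enters the loop; the library only remembers which wording reviewers trusted, it does not decide on its own what a good analysis is.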

The future of qualitative research is not about finding shortcuts. It’s about amplifying human insight through intelligent collaboration with AI. Prompting, done well, is moderation at scale. Research organizations that see AI as more than a supplementary tool will lead their industries in discovering insights that are both deeply human and scalable.

Ready to Master AI-Powered Research?

Explore how Remesh combines AI-ready data collection with advanced analysis capabilities. Discover why leading brands trust Remesh for AI-augmented insights: get a custom demo.
